We have just released the first public version of ReconstructMe! See the download.

Looking forward to hearing your feedback.

Start your engines, connect your Kinects, oil your swivel chairs: The ReconstructMe team, powered by PROFACTOR, proudly announces:

On Monday, everybody (which probably includes you) will be able to scan and reconstruct the world. ReconstructMe will be free for non-commercial use. Contact us if you are interested in commercial use.

Big thanks go out to our BETA testers, who made releasing on time possible! They provided valuable feedback throughout the entire BETA program, and without them we wouldn’t have reached the robustness and usability we have now.

Here’s to our Beta testers!

Here are a few words on a new feature we’ve recently added to our main development trunk: tracking failure detection and recovery. With this feature, the system can detect various tracking failures and recover from them. Tracking failures occur, for example, when the user points the sensor in a direction that is not covered by the volume currently being reconstructed, or when the sensor is accelerated too quickly. Once a tracking failure is detected, the system switches to a safety position and requires the user to roughly re-align the viewpoints. It automatically continues from the safety position once the viewpoints are aligned.

Here’s a short video demonstrating the feature at work.

We are considering integrating this feature into the final beta phase, since it improves both the stability and the usability of the system. Be warned, however: there are still cases where the system fails to track and also fails to detect that tracking was lost, causing the reconstruction to become messy.
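The post does not say how ReconstructMe decides that tracking was lost. As an illustration only, here is a minimal Python sketch of one common approach. It assumes the tracker reports, for every frame, the fraction of depth pixels that found a correspondence in the reconstructed volume, the mean alignment residual, and the translation since the previous frame. Few correspondences stand in for "the sensor points away from the reconstructed volume", and a large pose step stands in for "the sensor was accelerated too quickly". The class names, fields, and thresholds are hypothetical and not taken from ReconstructMe.

```python
from dataclasses import dataclass
from enum import Enum, auto


class TrackerState(Enum):
    TRACKING = auto()
    LOST = auto()  # parked at the safety position, waiting for re-alignment


@dataclass
class FrameAlignment:
    # Hypothetical per-frame statistics produced by the pose tracker.
    inlier_ratio: float         # fraction of pixels with a valid model correspondence
    mean_residual_mm: float     # mean point-to-surface distance after alignment
    translation_step_mm: float  # sensor movement since the previous frame


@dataclass
class TrackingMonitor:
    # Illustrative thresholds; real values would need tuning per sensor.
    min_inlier_ratio: float = 0.3
    max_residual_mm: float = 20.0
    max_step_mm: float = 100.0
    state: TrackerState = TrackerState.TRACKING

    def is_aligned(self, a: FrameAlignment) -> bool:
        """Enough overlap with the model and a small alignment error."""
        return (a.inlier_ratio >= self.min_inlier_ratio
                and a.mean_residual_mm <= self.max_residual_mm)

    def update(self, a: FrameAlignment) -> TrackerState:
        if self.state is TrackerState.TRACKING:
            # Lost if the view no longer overlaps the volume well,
            # or if the sensor jumped further than plausible in one frame.
            if not self.is_aligned(a) or a.translation_step_mm > self.max_step_mm:
                self.state = TrackerState.LOST
        else:
            # While lost, alignment is measured against the safety pose;
            # resume automatically once the user has roughly re-aligned.
            if self.is_aligned(a):
                self.state = TrackerState.TRACKING
        return self.state
```

No set of thresholds like this catches every failure: tracking can still drift while the statistics look acceptable, which is exactly the remaining weakness described above.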

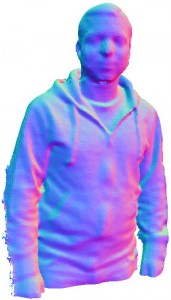

Continuing the scans of the ReconstructMe team, here are Christoph Kopf and Matthias Plasch. Feel free to print away as much as you like ;-) If you do, please send us pictures!

ckopf by Christoph Kopf is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

mplasch by Matthias Plasch is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

Here’s a list of 3D replications printed by our keen beta testers. The sources are the 360° depth streams of team members we posted here. If you are about to print one of our team members, let me know so I can update this post.

Derek, one of our enthusiastic users, has taken the time to replicate Martin’s stream on a 3D printer. There is a write-up about the making-of on Derek’s blog. Check it out; it’s worth reading!

Here is his video of the printing sequence:

Thanks a lot Derek!

Tony just dropped us a note that he successfully printed Christoph and uploaded the results to Thingiverse. Here is an image of the result:

Thanks a lot Tony!

Bruce (3D Printing Systems) replicated all three. His setup reminds me a bit of Mount Rushmore. Here’s the image:

Thanks a lot Bruce!

Our (PROFACTOR) interest in ReconstructMe goes beyond reconstructing 3D models from raw sensor data. The video below shows how to use ReconstructMe to perform stable foreground/background segmentation in 3D, a technique that is often required for pre-processing in 3D vision.

With ReconstructMe, you can build up background knowledge by moving the sensor around and thus creating a 3D model of the environment. Once you switch from build mode to track-only mode, ReconstructMe can robustly detect the foreground by comparing the environment model with the current frame.

This technique can be used for various applications

to name a few.
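To make the comparison step concrete, here is a minimal Python sketch of depth-based foreground detection. It assumes the environment model has already been rendered into a depth map from the current, tracked sensor pose, so each live pixel can be compared with the modelled background at the same pixel. The function name, the millimetre units, the "0 means no measurement" convention, and the 30 mm tolerance are illustrative assumptions, not ReconstructMe's API.

```python
from typing import List

# Rows of depth values in millimetres; 0 marks a missing measurement.
DepthMap = List[List[int]]


def segment_foreground(current: DepthMap, background: DepthMap,
                       tolerance_mm: int = 30) -> List[List[bool]]:
    """Mark pixels of the live frame that lie clearly in front of the known background.

    `background` is assumed to be the environment model rendered from the
    same sensor pose as `current`, so pixels correspond one-to-one.
    """
    if len(current) != len(background):
        raise ValueError("depth maps must have the same number of rows")
    mask: List[List[bool]] = []
    for cur_row, bg_row in zip(current, background):
        if len(cur_row) != len(bg_row):
            raise ValueError("depth maps must have the same number of columns")
        row: List[bool] = []
        for cur, bg in zip(cur_row, bg_row):
            if cur <= 0:
                row.append(False)  # no live measurement: cannot be foreground
            elif bg <= 0:
                row.append(True)   # model has no surface here: new geometry
            else:
                # Foreground only if clearly closer than the modelled surface;
                # the tolerance absorbs sensor noise on static background.
                row.append(cur < bg - tolerance_mm)
        mask.append(row)
    return mask
```

The tolerance is the main design knob: too small and sensor noise on the static background shows up as foreground, too large and objects touching the background (a hand on a table) are missed.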

We have just recorded three of our colleagues and created STL models of them. This time we made full models of ourselves by rotating in front of the camera: one person sat on a swivel chair and rotated, while the other moved the Kinect up and down so we could capture the front, the back, and also the top. If you own a 3D printer or 3D printing software, we would very much like to know whether these models are good enough for 3D printing! Please post any comments here. For everyone who made it into the BETA program, the data stream is also available so you can create the STL model yourself. We post-processed the STL files in MeshLab by re-normalising the normals, and converted them to binary STL.
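For readers who want to reproduce this post-processing in their own tools, the sketch below shows what the two steps amount to, assuming the mesh is a plain list of triangles given as (x, y, z) tuples. Each face normal is recomputed as the normalized cross product of two triangle edges, and the mesh is written in the binary STL layout: an 80-byte header, a little-endian uint32 triangle count, then 50 bytes per triangle (normal, three vertices, and a 16-bit attribute field). This illustrates the file format; it is not MeshLab's implementation, and the function names are hypothetical.

```python
import struct
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def face_normal(tri: Triangle) -> Vec3:
    """Unit normal of a triangle; orientation follows the vertex winding order."""
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = tri
    ux, uy, uz = bx - ax, by - ay, bz - az  # edge a -> b
    vx, vy, vz = cx - ax, cy - ay, cz - az  # edge a -> c
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # degenerate triangle has no defined normal
    return (nx / length, ny / length, nz / length)


def to_binary_stl(triangles: Sequence[Triangle], header: bytes = b"") -> bytes:
    """Serialize triangles as binary STL, recomputing every face normal."""
    out = bytearray(header[:80].ljust(80, b"\0"))  # fixed 80-byte header
    out += struct.pack("<I", len(triangles))       # uint32 triangle count
    for tri in triangles:
        out += struct.pack("<3f", *face_normal(tri))  # float32 normal
        for vertex in tri:
            out += struct.pack("<3f", *vertex)        # float32 x, y, z
        out += struct.pack("<H", 0)                   # attribute byte count, usually 0
    return bytes(out)
```

Recomputing normals from the winding order assumes the triangles are consistently oriented (counter-clockwise seen from outside); if they are not, the normals will point inward on some faces.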

Yesterday we received a new ASUS Xtion PRO LIVE device, and at first glance it looks really tiny compared to the Microsoft Xbox Kinect. It lacks the tilt motor in the base and does not need an external power supply. The lenses and hardware setup seem to be identical to the Kinect’s, which allows us to use the same default calibration.

As a result, we only needed to install the PrimeSense hardware binaries to get the ASUS device working in ReconstructMe.

ReconstructMe enables you to create a three-dimensional virtual model of real-world objects or scenes. To do so, you simply walk around with a 3D video capture device, such as Microsoft’s new Kinect sensor, and film the object(s) you are interested in. Filming the objects from as many different viewpoints as possible will give you a detailed model of the real-world object or scene. If you film the scene from 360 degrees, you will also get the virtual scene in 360 degrees. Once the virtual environment model has been created, a surface model can be generated.

ReconstructMe consists of three core components.

With the new Kinect for Windows, due to ship in February, Microsoft introduces the so-called near mode. There is a good write-up about it on the Microsoft blog. It seems that the hardware setup remains largely the same (lenses, base offset), but the firmware changes. Near mode can be activated through a software switch in the Kinect SDK.

I guess this all means that the OpenNI driver, on which ReconstructMe is currently based, won’t be able to switch near mode on and off in the near future. However, others have reported that under certain lighting conditions the Kinect is already capable of seeing objects as close as 400 mm. A short in-house test revealed that valid z-depth values start as close as 405 mm using the Xbox Kinect and the OpenNI drivers.

I think that switching from OpenNI to the Kinect SDK wouldn’t be a great benefit, as it would rule out other OpenNI-compatible devices such as the ASUS Xtion PRO LIVE and would additionally tie ReconstructMe to the Windows platform. Instead, we are considering providing dual-driver support.

Update 2012/03/22: As of today, we use a dual-driver model to support both OpenNI and Kinect for Windows.

Hi out there,

we recorded a new video showing the fast reconstruction of different people in just a few seconds. You will also see that camera tracking remains stable even when a person walks through the recorded scene. Check out the video!

You can also download the generated model of the last person in the video.

Download as STL model:

Original resolution: 75,304 vertices, 2.8MB

Reconstructed-Person by Christoph Kopf is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

Folks,

http://www.reconstructme.net was unreachable for a couple of hours due to a power blackout. Sorry for the inconvenience.

Best,

Christoph

Hi folks,

we think it is a good idea to split the BETA program into multiple phases. Essentially, we will start with a bare-bones ReconstructMe and add functionality incrementally as we move along. We think that doing it this way will allow us to break bigger issues down into manageable chunks, for which we can hopefully provide fast fixes.

If all goes well, we will end up, as promised, with a ‘full-blown’ ReconstructMe on the 28th of February. We will start sending out e-mails to all participants on the 2nd of February. If you haven’t received one by the 3rd of February, leave a reply below.

Any opinions or remarks?

If you want to spread the word about ReconstructMe, please go ahead. We currently need as much public attention as possible. We’ve already submitted links to a couple of platforms, including Digg, Reddit, DZone, and Hacker News. Please upvote if you enjoy what we do.

Cheers,

Christoph