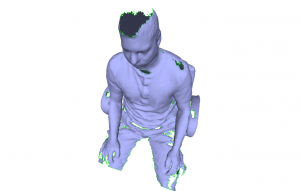

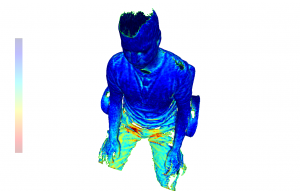

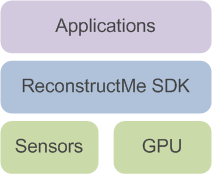

As of today, ReconstructMe SDK 1.0 is available for commercial and non-commercial use. The SDK is designed for a broad range of applications: it targets simple real-time reconstruction applications and scales up to projects with multiple sensors.


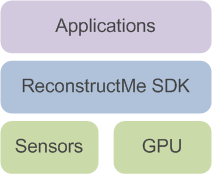

Design

We spent a lot of time designing the API, as we wanted it to be as easy to use as possible without limiting its flexibility. After dozens of approaches and evolutionary prototypes, we’ve settled on a design that we think accomplishes the following main goals:

- easy-to-use: A generic and consistent API allows you to grasp the concepts quickly and develop your first reconstruction application within minutes.

- easy-to-integrate: The API comes as a pure C-based implementation without additional compile-time dependencies. Interoperability with other programming languages is easily possible.

- high-performance: The SDK is designed to provide maximum performance for a smooth reconstruction experience.

Real-time 3D reconstruction is complex. We tried to hide as many details as possible about the process to allow you to concentrate on application programming.

Availability

The ReconstructMe SDK is available for both non-commercial and commercial usage. Both versions feature the same functionality, but the non-commercial version is limited in some aspects. See our project page for details. The package comes bundled with an installer that lets you install the necessary sensor drivers on the fly (note that the drivers are not included in the package, but are downloaded from our site).

ReconstructMe SDK is currently compiled for 32-bit Windows using Visual Studio 10.

Interoperability

Getting ReconstructMe to work in other programming languages is easy. We added an example to demonstrate this for C#. We’d like to add bindings for all common languages, but obviously that is a task that requires the help of the community. If you’d like to contribute to a binding, drop us a note in our development forum.

Example

Here’s a first introductory example of the C API, copied from the reference documentation. It shows how to perform real-time reconstruction using a single sensor. In addition, it uses the built-in real-time visualization and surface visualization. The final mesh is saved in PLY format.

Here’s the corresponding code. The excerpt shows the SDK calls only; the declarations of `c`, `v`, `s`, `viewer`, and `m` and the update of the `time` counter are omitted.

```c
#include <reconstructmesdk/reme.h>

// Create a context and compile it
reme_context_create(&c);
reme_context_compile(c);

// Create the reconstruction volume
reme_volume_create(c, &v);

// Create and open a sensor from the listed driver candidates
reme_sensor_create(c, "openni;mskinect;file", true, &s);
reme_sensor_open(c, s);

// Open a viewer showing the depth image and the volume image
reme_viewer_create_image(c, "This is ReconstructMe SDK", &viewer);
reme_viewer_add_image(c, viewer, s, REME_IMAGE_DEPTH);
reme_viewer_add_image(c, viewer, s, REME_IMAGE_VOLUME);

// Reconstruct while the counter stays below 200 and frames can be grabbed
while (time < 200 && REME_SUCCESS(reme_sensor_grab(c, s))) {
  reme_sensor_prepare_images(c, s);
  // Update the volume only if the sensor position could be tracked
  if (REME_SUCCESS(reme_sensor_track_position(c, s))) {
    reme_sensor_update_volume(c, s);
  }
  reme_viewer_update(c, viewer);
}

// Close and destroy the sensor
reme_sensor_close(c, s);
reme_sensor_destroy(c, &s);

// Generate the surface from the volume and save it as PLY
reme_surface_create(c, &m);
reme_surface_generate(c, m, v);
reme_surface_save_to_file(c, m, "test.ply");

// Show the resulting surface and wait on the viewer
reme_viewer_t viewer_surface;
reme_viewer_create_surface(c, m, "This is ReconstructMeSDK", &viewer_surface);
reme_viewer_wait(c, viewer_surface);

// Release the surface and the context
reme_surface_destroy(c, &m);
reme_context_destroy(&c);
```
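The example above stores the final mesh as `test.ply`. For readers who have not worked with PLY before, here is a minimal sketch that writes a single triangle as an ASCII PLY file using only the C standard library. The helper `write_triangle_ply` and the file names are made up for illustration and are not part of the ReconstructMe SDK. Whether the SDK itself writes ASCII or binary PLY is not covered here.

```c
#include <stdio.h>

/* Hypothetical helper for illustration only (not an SDK function).
   Writes one triangle as an ASCII PLY file.
   Returns 0 on success and -1 if the file cannot be written. */
int write_triangle_ply(const char *path)
{
    /* Three vertices (x, y, z); the single face refers to them by index. */
    static const float vertices[3][3] = {
        {0.0f, 0.0f, 0.0f},
        {1.0f, 0.0f, 0.0f},
        {0.0f, 1.0f, 0.0f}
    };
    int i;

    FILE *f = fopen(path, "w");
    if (f == NULL) {
        return -1;
    }

    /* Header: declares the elements and their properties. */
    fprintf(f, "ply\n");
    fprintf(f, "format ascii 1.0\n");
    fprintf(f, "element vertex 3\n");
    fprintf(f, "property float x\n");
    fprintf(f, "property float y\n");
    fprintf(f, "property float z\n");
    fprintf(f, "element face 1\n");
    fprintf(f, "property list uchar int vertex_indices\n");
    fprintf(f, "end_header\n");

    /* Body: one line per vertex, then one line per face. */
    for (i = 0; i < 3; ++i) {
        fprintf(f, "%g %g %g\n",
                vertices[i][0], vertices[i][1], vertices[i][2]);
    }
    /* A face line starts with its vertex count, followed by the indices. */
    fprintf(f, "3 0 1 2\n");

    return fclose(f) == 0 ? 0 : -1;
}
```

Calling `write_triangle_ply("triangle.ply")` produces a small file with the same header-then-body layout that any ASCII PLY mesh uses, which makes it a handy reference when writing code that consumes reconstructed meshes.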

For more examples, please visit the documentation’s example page.

Happy reconstruction!

The ReconstructMe Team.
