Nikolas Früh dropped us a note that he has finished his Master’s thesis. The thesis is all about measuring building progress and incorporating that data into various processes. It is written in German and contains around 15 pages dealing with ReconstructMe, which is compared to competitors in terms of usage and accuracy. Nikolas was kind enough to allow us to publicly link his thesis for interested users. You can find it on the references page.

Long Night of Research

The Long Night of Research (in German, “Lange Nacht der Forschung”) on Friday last week was a great success for PROFACTOR and ReconstructMe. We had around 300 people visiting and exploring our robotics, nano-tech and chemistry labs.

At the ReconstructMe booth we had a lot of fun reconstructing our visitors and enjoyed the in-depth discussions with potential users and developers. What really surprised us was how quickly people learnt to use this new technology to scan their family members.

We used the swivel armchair setup to speed up the reconstruction process and gave each visitor a personalized 3D PDF containing an embedding of their reconstruction. Here are two such samples: one, two (Acrobat Reader required to view the embedding).

Here’s a video made at the event

Pictures by CityFoto.

ReconstructMe – Reconstruct Your World

We’ve done another quick video today, showing some of the advances of ReconstructMe in the past month.

This video shows how easy it is to model your environment in 3D with ReconstructMe. First we let a friend spin on a swivel armchair while moving the sensor around to change viewpoints. Then we model our office within seconds using ReconstructMe.

The left side shows how the reconstruction grows from a fixed perspective as the camera is moved. The right view shows the reconstruction from the current camera perspective. When entering pause mode, ReconstructMe instantly switches to an interactive 3D preview of the result.

Scan of a Nissan Qashqai using Volume Stitching

A few days ago we tested our new volume stitching feature, which enables the rough alignment of multiple volumes. We did a quick scan of a car using 1.5m sized volumes. What you can see below is the raw output of ReconstructMe without any filtering or post-processing, except for slightly repositioning one of the volumes. We put together a promo video demonstrating the process and the results:

As you can see, ReconstructMe is running on a laptop and we are using the Asus Xtion Pro Live for better mobility and flexibility in scanning. We have quite a big release in the queue, featuring among other things:

- Volume stitching

- 3D Surface preview

- PLY file support

Stay tuned.

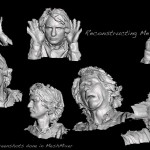

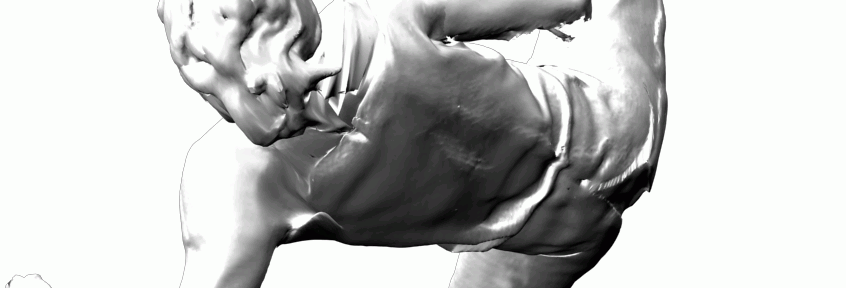

Gallery Update – ReconstructMe + ZBrush

Skaale sent us the following reconstruction of Tine. Glasses were used to increase the resolution, which probably required manual stitching of the individual views.

Here’s a turntable animation of the final result

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

ReconstructMe 0.4.0-278 Released

We continue to release after each development sprint. So here is our new release with three new features.

- Added individual resolutions per dimension.

- Automatic correction of integration truncation when value is too low.

- Added gradient_step_fact parameter to control the smoothness of neighboring normals.

Adjusting resolutions per dimension allows you to add more detail to the dimensions that require it (think about relief scans or similar non-square volumes). To adjust the settings, look at the packaged configuration files that ship with ReconstructMe. Backward compatibility with older configuration files is broken, so please update your files accordingly.
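For anyone updating an older configuration file, here is a minimal sketch in Python of one possible migration step. It assumes that the relevant format change is the one visible when comparing the configurations on this page: a scalar volume_size entry in older files versus an x/y/z block in newer ones. The function name upgrade_volume_size is made up for this illustration and is not part of ReconstructMe. Other changes, such as the new gradient_step_fact entry, may also be needed, so check the packaged configuration files.

```python
import re


def upgrade_volume_size(config_text: str) -> str:
    """Replace a scalar 'volume_size: N' line with an x/y/z block.

    Assumption: this is the only structural change needed. The real
    format may require more, so compare with the packaged files.
    """
    def to_block(match: re.Match) -> str:
        n = match.group(1)
        # Use the same resolution on every axis as a starting point.
        return f"volume_size {{\n  x: {n}\n  y: {n}\n  z: {n}\n}}"

    # [ \t]* (not \s*) so that following blank lines are left untouched.
    return re.sub(r"^volume_size:[ \t]*(\d+)[ \t]*$", to_block,
                  config_text, flags=re.MULTILINE)


old = "camera_far: 2000\nvolume_size: 512\nintegrate_truncation: 10\n"
new = upgrade_volume_size(old)
print(new)
```

Once the block exists, each axis can be edited independently, for example by lowering z for a flat relief scan.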

The second feature detects misconfigurations of the integrate_truncation parameter. This parameter depends on the volume size and resolution. If a value below the minimum is detected, the truncation is clamped to the minimum value.

Below is a screenshot of a high resolution keyboard and hand scan.

Here’s the configuration

camera_size_x: 640

camera_size_y: 480

camera_fx: 571.26

camera_fy: 571.26

camera_px: 320

camera_py: 240

camera_near: 100

camera_far: 2000

volume_size {

x: 1024

y: 1024

z: 128

}

volume_min {

x: -250

y: -250

z: 400

}

volume_max {

x: 250

y: 250

z: 600

}

integrate_truncation: 5

integrate_max_weight: 64

icp_max_iter: 20

icp_max_dist2: 200

icp_min_cos_angle: 0.9

smooth_normals: false

disable_optimizations: false

extract_step_fact: 0.5

gradient_step_fact: 0.5
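To see what the per-dimension resolution means for this configuration, the following Python sketch computes the edge length of a single grid cell along each axis. It assumes that volume_size is the number of cells per axis and that volume_min and volume_max are the bounds of the volume in the same length unit (probably millimetres, judging by the camera_near and camera_far values). The helper name voxel_size is made up for this illustration.

```python
def voxel_size(vmin, vmax, cells):
    """Edge length of one grid cell per axis: extent / number of cells.

    Assumption: 'cells' corresponds to volume_size, and vmin/vmax to
    volume_min/volume_max from the configuration above.
    """
    return {axis: (vmax[axis] - vmin[axis]) / cells[axis] for axis in "xyz"}


# Values copied from the keyboard and hand scan configuration.
volume_size = {"x": 1024, "y": 1024, "z": 128}
volume_min = {"x": -250, "y": -250, "z": 400}
volume_max = {"x": 250, "y": 250, "z": 600}

sizes = voxel_size(volume_min, volume_max, volume_size)
for axis in "xyz":
    print(f"{axis}: {sizes[axis]:.4f} units per cell")
```

Under these assumptions the cells are roughly three times finer in x and y (about 0.49 units) than in z (about 1.56 units), which suits a flat subject such as a keyboard.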

ReconstructMe 0.4.0-269 Released

Here’s the changelog

- Added --calibrate command to perform intrinsic depth calibration.

- Fixed repairing of surface borders when points are outside of the volume.

Make sure to read the calibration section on the usage page.

ReconstructMe with Glasses

Inspired by ideas of MagWeb, Tony Buser has done an awesome glasses mod with the Kinect and ReconstructMe. He got +2.5 reading glasses and fixed them in front of the Kinect. It is a bit difficult to get a complete scan with these as the image is distorted, but the result looks really excellent:

The mod looks really cool, I am amazed that this actually works… It definitely shows that adding a lens to the Kinect might be a good solution to be able to scan small parts. The difficult part will be to provide a calibration method that can successfully undistort the data.

Here are the settings Tony Buser has used for this scan:

camera_size_x: 640

camera_size_y: 480

camera_fx: 514.16

camera_fy: 514.16

camera_px: 320

camera_py: 240

camera_near: 100

camera_far: 2000

volume_size: 512

volume_min {

x: -250

y: -250

z: 400

}

volume_max {

x: 250

y: 250

z: 900

}

integrate_truncation: 10

integrate_max_weight: 64

icp_max_iter: 20

icp_max_dist2: 200

icp_min_cos_angle: 0.9

smooth_normals: false

disable_optimizations: false

extract_step_fact: 0.5
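The camera_fx, camera_fy, camera_px and camera_py entries look like the usual pinhole camera intrinsics: focal lengths and principal point in pixels. Assuming that interpretation, and assuming depth values are in the same unit as the volume bounds, a depth pixel could be turned into a 3D point as in the Python sketch below. This is the textbook pinhole model, not necessarily what ReconstructMe does internally, and it ignores the lens distortion introduced by the glasses.

```python
def back_project(u, v, depth, fx=514.16, fy=514.16, px=320.0, py=240.0):
    """Map pixel (u, v) with a depth value to a 3D point (x, y, z).

    Assumption: standard pinhole model without distortion. Default
    intrinsics are taken from the configuration above.
    """
    x = (u - px) * depth / fx
    y = (v - py) * depth / fy
    return (x, y, depth)


# The principal point maps onto the optical axis.
center = back_project(320, 240, 500.0)
# A pixel 100 columns right of the centre, using a round focal length.
offset = back_project(420, 240, 1000.0, fx=500.0)
print(center, offset)
```

A smaller focal length spreads the same pixel offset over a larger distance in space, which is one reason the intrinsics need recalibrating when a lens is placed in front of the sensor.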

Gallery Update – Mirrors

MagWeb uses mirrors to cut down reconstruction time to a third when compared to a standard walk-around turn. Here are a couple of images.

And a turntable video of the result.

If you would like to know more about how to do that, join our discussion.

Gallery Update – Bags

Refael sent us these nicely rendered open-bags scanned with ReconstructMe and rendered with Mantra.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

ReconstructMe 0.4.0-255 Released

We have just released ReconstructMe 0.4.0-255 that contains Microsoft Kinect for Windows and Near Mode support. Here are the details

- Support for Microsoft Kinect for Windows added.

- Fixed and extended batch starters.

- First version to build against Microsoft Visual Studio 10.

The installation procedure and usage have been updated. In particular, you will need to update the C++ redistributables.

ReconstructMe now supports at least 4 different sensors.

- Microsoft Kinect for Windows

- Microsoft Kinect for Xbox

- Asus Xtion Pro Live (RGB and Depth)

- Asus Xtion Pro (Depth only)

Scan of a Fiat 500 Cabrio

MagWeb has done another incredibly stunning scan, this time of an open-top Fiat 500C. Here’s a video of a turntable animation of his results.

MagWeb will post images later on in the newsgroup and we intend to provide the resulting CAD model, once we have resolved legal questions.

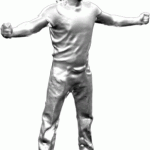

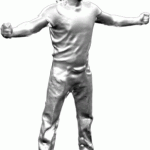

Character Creation with a ReconstructMe Scan

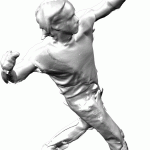

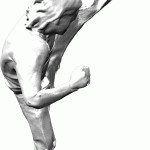

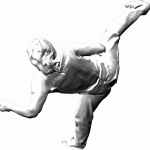

We have created a full body scan of one of our coworkers using a bigger volume, and he used this as the basis for a character animation.

- The most difficult part of the scanning process was to stand still and not move the arms. We solved this problem by letting the model hold a broomstick in each hand :) This data was later removed from the CAD scan.

- To animate the skin of the character, a biped system from 3ds Max was used.

- Finally, with Motion Mixer from 3ds Max, several BIP files were loaded to affect biped motion.

The result looks quite stunning! Here is a video with the result:

Here are some more screenshots:

ReconstructMe 0.4.0-231 Released

ReconstructMe 0.4.0-231 is a minor bug-fix release. Get the changelog here: http://reconstructme.net/releases/

Zoom Lens Results

MagWeb modded his Kinect with +2.5 glasses (zoom lens) to increase the resolution at near range. He applied a custom depth calibration and was able to reconstruct nearly 360°. Here are some early results.

More information can be found in the corresponding newsgroup thread.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.
