We are proud to announce that ReconstructMe has teamed up with CANDELOR, our in-house solution for robust and fast object localization, to significantly improve the tracking experience of ReconstructMe.

The integration of CANDELOR allows us to greatly improve the following aspects of ReconstructMe:

Recovery of Camera Tracking

Until now, ReconstructMe assumed that the camera movement between subsequent frames is small. Violating this constraint threw ReconstructMe off track, and to re-localize, the user had to position the camera very close to the last recovery position. While this works most of the time, finding the correct position manually can be tedious.

With CANDELOR we can relax this requirement, as its algorithms allow us to determine the correct camera movement even for large displacements. This is possible because CANDELOR searches for similar features in the 3D data of the recovery position and of the current sensor frame. Given a set of corresponding features, a transform can be estimated that reflects the sought camera movement.
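The post does not say which estimator CANDELOR uses internally. As a stand-in, the sketch below uses Horn's closed-form quaternion method to recover the rotation R and translation t that best map the features of the recovery position onto the matching features of the current frame, in the least-squares sense. It assumes the correspondences are already matched and free of outliers (a practical pipeline would usually wrap this in a robust scheme such as RANSAC), and `estimate_rigid_transform` and `_jacobi_eigen` are hypothetical names, not part of any ReconstructMe or CANDELOR API.

```python
import math


def _jacobi_eigen(a, sweeps=50):
    """Eigen-decomposition of a small symmetric matrix via cyclic Jacobi rotations.

    Returns (eigenvalues, V) where column i of V is the unit eigenvector for eigenvalue i.
    """
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < 1e-24:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle that zeroes a[p][q].
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A P (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- P^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # accumulate eigenvectors: V <- V P
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def estimate_rigid_transform(src, dst):
    """Hypothetical helper: least-squares R, t with dst_i ~= R @ src_i + t.

    src: feature positions at the recovery position; dst: matched positions in
    the current frame. Uses Horn's quaternion method (a stand-in, not CANDELOR's).
    """
    n = len(src)
    cs = [sum(p[k] for p in src) / n for k in range(3)]  # source centroid
    cd = [sum(p[k] for p in dst) / n for k in range(3)]  # target centroid
    # Cross-covariance S[i][j] = sum of (src - cs)_i * (dst - cd)_j over all pairs.
    S = [[0.0] * 3 for _ in range(3)]
    for p, q in zip(src, dst):
        a = [p[k] - cs[k] for k in range(3)]
        b = [q[k] - cd[k] for k in range(3)]
        for i in range(3):
            for j in range(3):
                S[i][j] += a[i] * b[j]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # The optimal unit quaternion is the eigenvector of N's largest eigenvalue.
    vals, vecs = _jacobi_eigen(N)
    best = max(range(4), key=lambda i: vals[i])
    w, x, y, z = (vecs[r][best] for r in range(4))
    R = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]
    # Translation maps the rotated source centroid onto the target centroid.
    t = [cd[i] - sum(R[i][k] * cs[k] for k in range(3)) for i in range(3)]
    return R, t
```

With noise-free correspondences from at least three non-collinear points, the recovered R and t match the true camera motion up to floating-point error; with real sensor data they are the least-squares best fit to the matched features.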

The video below shows tracking with CANDELOR enabled and directly compares tracking performance of reconstruction with and without CANDELOR.

As one can see in the video, recording is paused multiple times and the resume position is far from the paused position. Despite this camera displacement, CANDELOR successfully recovers tracking.

Automated extrinsic calibration of multiple sensors

A nice benefit of using CANDELOR is that it has now become very easy to use multiple sensors working on the same volume. Traditional multi-sensor applications require a good estimate of the so-called extrinsic calibration, that is, the transformation between two cameras. This extrinsic calibration is often assumed to be fixed and must not change.

ReconstructMe works differently. CANDELOR automatically calculates the initial extrinsic calibration of multiple cameras. Once both sensors have registered, you can freely move the cameras to different locations, and they will stay calibrated through the data you record. The key advantage is that multiple sensors can scan more quickly (divide and conquer).
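Once every sensor has been localized against the shared volume, the transformation between two of them follows directly from their two poses. The post does not describe how ReconstructMe represents these poses; the sketch below assumes each sensor pose is a 4x4 homogeneous camera-to-volume matrix (hypothetical names `T_vol_cam1` and `T_vol_cam2`) and computes the camera-2-to-camera-1 extrinsic as inv(T_vol_cam1) · T_vol_cam2. Because both poses are re-estimated from the recorded data, recomputing this product after a camera moves yields the updated calibration.

```python
def rigid_inverse(T):
    """Invert a 4x4 rigid transform [R t; 0 1] as [R^T  -R^T t; 0 1]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [-sum(Rt[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [Rt[0] + [t[0]], Rt[1] + [t[1]], Rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def matmul4(A, B):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def extrinsic_cam2_to_cam1(T_vol_cam1, T_vol_cam2):
    """Hypothetical helper: maps points from camera-2 coordinates into camera-1 coordinates.

    Both inputs are assumed camera-to-volume poses in the same reconstruction volume.
    """
    return matmul4(rigid_inverse(T_vol_cam1), T_vol_cam2)
```

For example, if camera 1 sits 2 units along x and camera 2 is rotated 90 degrees about z at (2, 1, 0), the extrinsic is that same rotation with a translation of (0, 1, 0), i.e. camera 2 sits one unit along camera 1's y axis.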

In the following video you can see Christoph and me scanning a person using multiple cameras that work on the same volume.

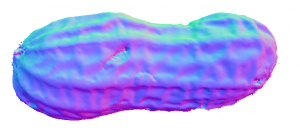

Point-and-shoot 3D object reconstruction

We’ve added a new reconstruction mode based on global tracking: Point-and-shoot. In this mode, objects are reconstructed only at specific, manually picked locations. Users with low-powered GPUs/CPUs in particular will benefit from this mode, as it allows capturing an object from very few positions. The video below shows how it works.

Although only a few positions are used for data generation, the model looks quite smooth and closed.

We are confident that the new features will ship with the upcoming SDK and Qt within the next two weeks.

Happy reconstruction!

The ReconstructMe-Team
